W3C Compliance

Every W3C compliant web server is supposed to have a “time taken” field available to reveal how long the web server took to process/serve a resource. However, by default, Apache and many other UNIX based web servers do not have good logging options for performance timings. NGINX and Litespeed pretty much adopt the Apache defaults. Typically the defaults aren’t the best choices for a load testing situation.

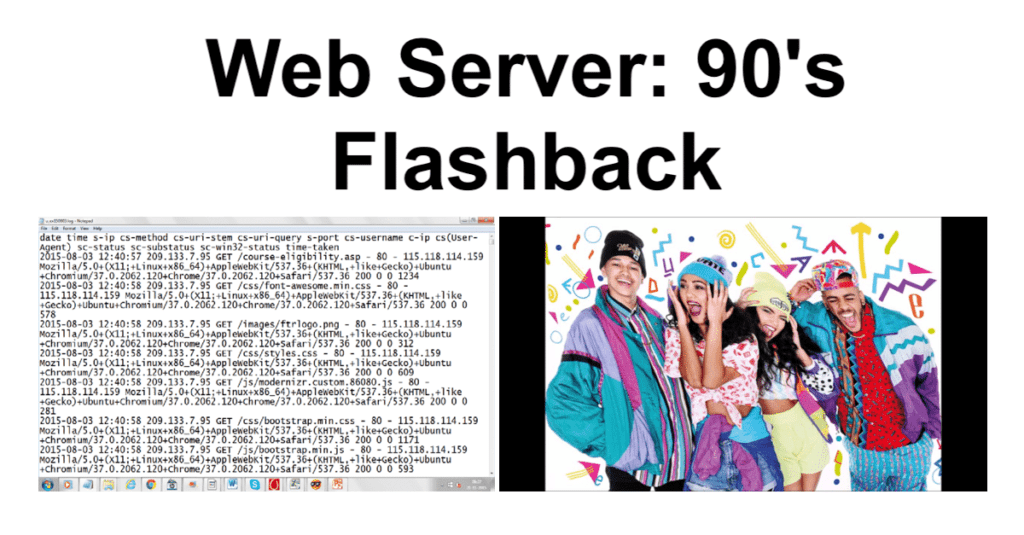

When I look at the numbers produced in the web server log, I realize they aren’t even the full story. It is only a measurement of the web servers response time, and does not indicate the full browser rendering time. However, it is useful to have these metrics to compare WITH full browser rendering. Let’s first look at the default NCSA extended/combined log format:

“%h %l %u %t \”%r\” %>s %b \”%{Referer}i\” \”%{User-agent}i\”

This standard was first released in 1993, and is based on a discontinued web server called HTTPd developed by the National Center for Supercomputing Applications, a unit of the University of Illinois at Ubana-Champaign. Many of the formatting codes are still relevant for logging. However, as we look deeper we see a glaring omission is the absence of a performance timing. I find this hard to believe. Wouldn’t most web server administrators want to know, at least at a basic level, the kind of performance metrics the web server is producing? The Apache web server formatting codes come from from a specific Apache module called “mod_log_config”. This is the code used to create the logs. We can find all of the latest codes and what they mean here:

https://httpd.apache.org/docs/2.4/mod/mod_log_config.html

Outdated Defaults

As we start matching the standardized codes to their descriptions, we can begin to understand what the access.log produced by the module reveals. The second format code is %l, which is “Remote logname (from identd, if supplied). This will return a dash unless mod_ident is present and IdentityCheck is set On.” The problem here is that ident and identd represent a protocol that is no longer being used by the majority of web applications.

https://en.wikipedia.org/wiki/Ident_protocol

There are many reasons it isn’t being used today versus in 1993, including for security reasons. The use of the %l code is probably not very useful for today’s access.logs. My suggestion is to replace this useless flag with one that will be used. There are a few performance related codes available to us:

- %D – The time taken to serve the request, in microseconds.

- %T – The time taken to serve the request, in seconds.

- %{UNIT}T – The time taken to serve the request, in a time unit given by UNIT. Valid units are ms for milliseconds, us for microseconds, and s for seconds. Using s gives the same result as %T without any format; using us gives the same result as %D. Combining %T with a unit is available in 2.4.13 and later. We are using version 2.4.29

Although I am admittedly biased about including performance metrics, there are probably other codes useful for other reasons. It’s worth the time to go through each of the NCSA standard codes in the mod_log_config module to determine what the best logging format is for each situation.

Let’s Make It Better

If you need a place to start, I suggest to use all three, with the UNIT of ms for the third option. Replace the %I code for ident with the %D code and add the other two timings on the end. I got the idea from this URL:

https://fplanque.com/dev/linux/how-to-log-request-processing-times-in-apache

An even more useful option may be to set up a format that outputs in JSON nicknamed AccessLogJSON:

LogFormat “{ \”time\”:\”%t\”, \”remoteIP\”:\”%a\”, \”host\”:\”%V\”, \”request\”:\”%U\”, \”query\”:\”%q\”, \”method\”:\”%m\”, \”status\”:\”%>s\”, \”userAgent\”:\”%{User-agent}i\”, \”referer\”:\”%{Referer}i\”, \”processingtime\”:\”%D\” }” AccessLogJSON

Once something is in the JSON format, it can be easily parsed programmatically and visualized in a myriad of modern solutions. I think the key is getting to fast visualizations and then using additional tooling to look for performance variations and anomalies. This is just for the web server log. It is just one piece of the bigger performance puzzle, but it helps a lot. With the proper monitoring as described, you will more easily handle triage situations. Then the performance testing team can understand more about how things look in production today and compare with the test results in the QA/Staging environments.

Summary

If you are relying on the “out of the box” logging options from the web server vendor, you may be stuck in the 90’s with monitoring. Based on this information, isn’t it time we modernized the default choices and include more relevant data in an easy to ingest format? For those serving on the web server working groups, perhaps take a look at this when you get some time.